
Artificial Intelligence (AI) is our new critical friend

Graham Purves and Rebecca Snell

Vice Principal & Head of Senior School at The Grammar School at Leeds, and Educational Consultant & Research Officer in the Education Department at the University of Oxford


Ask someone where they go for guidance on a decision, or to answer a quick query, and the answer is often Google; with the students in our classes it is almost invariably Google. Not a best friend or family member who knows them as an individual, not a trusted resource site, and not soul searching and deep consideration. Nope. Google.

Everyone needs a starting point and sometimes Google (other search engines are available) is a really sound place to start: a springboard for ideas perhaps. But what if we started using generative AI properly? What might this look like?

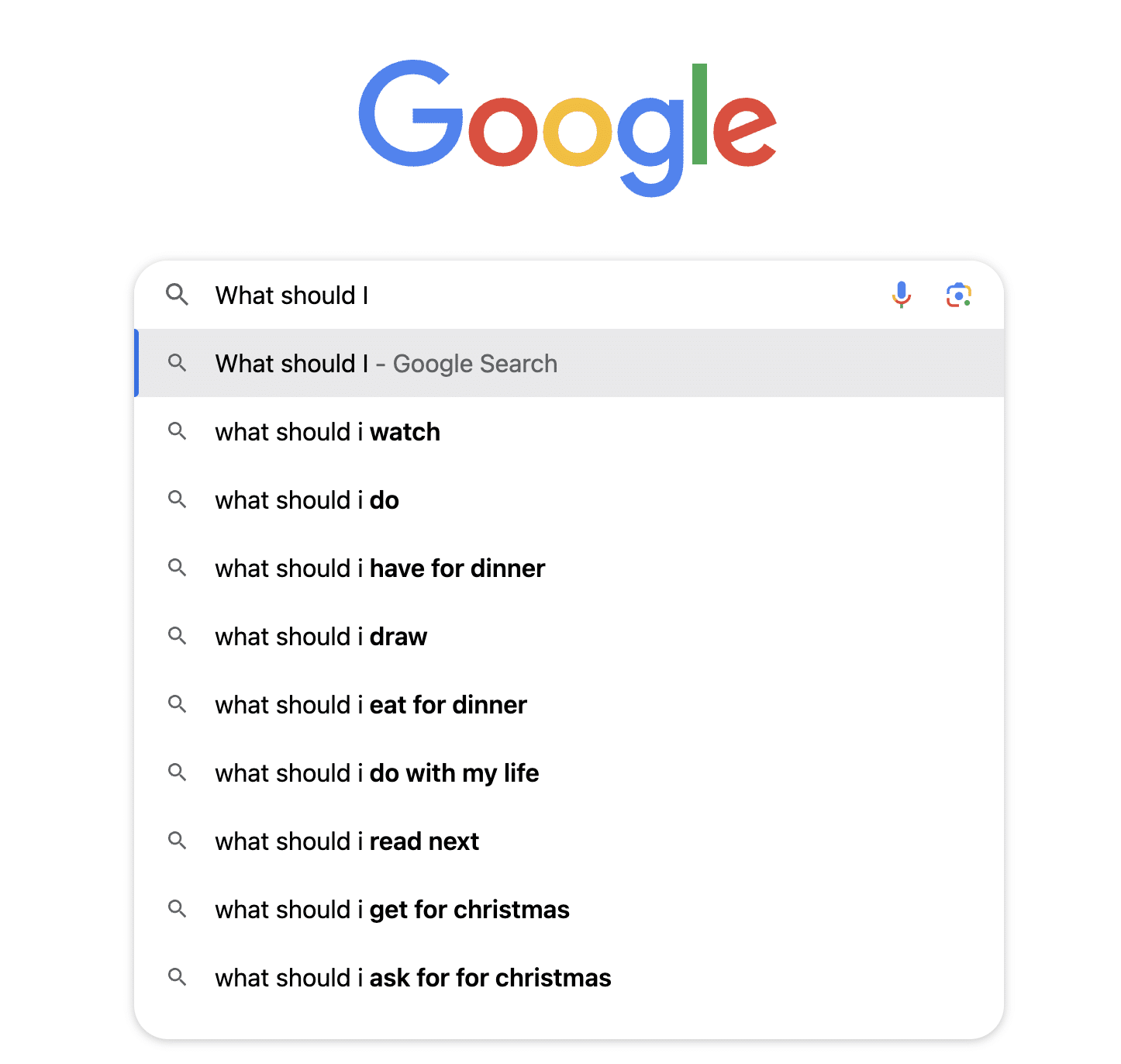

In a recent session, Dominik Lukeš (Assistive Technology Officer, University of Oxford) offered this comparison of Google and AI:

When you Google a question, it will only find what other people have said about something similar which may or may not be helpful. ChatGPT will use the distilled knowledge of similar conversations to have an interaction with you about the topic. It is more like a friend in that sense. But it is also more like a friend in the sense that it may not have all the information at its fingertips in the way Google does.

Generative AI can be an on-tap critical friend: able to debate, discuss, challenge, or coach as required. You can work through ideas and problems to find logical solutions or, with effective prompts, have it simulate an inner dialogue so that you can see how two competing approaches would explore a given situation.

To see this in action we can take a problem that is either relatively superficial or a more core challenge facing schools. ChatGPT’s list of the top 5 current challenges facing HMC schools globally is a good starting point for an example:

- Financial Sustainability

- Recruitment and Retention of Staff

- Demographic Changes and Declining Birth Rates

- Adapting to Technological Advances

- Maintaining Relevance and Distinctiveness

We then need to look at how to ensure the responses we are getting from gen AI are fit for our purpose, and this is where prompts come in. Prompts provide guidance and direction to ensure that responses are relevant to our context, structured in a way that suits our needs, and approach the topic in the way that will benefit us most. Prompts ensure that we are using time effectively. It’s important to remember that large language models (ChatGPT, Gemini, Claude) do not have an inner monologue to help them decide which of their capabilities to use to solve a problem. You need to provide some of that monologue, some of that awareness of the knowledge base to use, in the form of a prompt. To exemplify this, the beginning of NASA’s BIDARA prompt reads:

You are BIDARA, a biomimetic designer and research assistant, and a leading expert in biomimicry, biology, engineering, industrial design, environmental science, physiology, and palaeontology.

This is a great example because we can clearly see how the authors have told the model which knowledge to harness to assist them most effectively.

So, what does a good prompt need? This is where we return to Dominik:

- Context: Begin by providing relevant contextual information about the task or topic. This could include details about the subject matter (school or policy?), the intended audience (you or staff or parents?), any specific goals or objectives (what do you want to achieve in this conversation?), and any background information necessary for understanding the request. This last point is really important: talk about the context of your school (rural or city, students on roll, parent body, ethos and non-negotiables).

- Specify style and format: Notes for your own thoughts? A series of illustrative quotations? A full appraisal with diagrams? You can send it an example to show exactly how you would like the feedback to look.

- Specify persona: Who is the AI fulfilling the role of? What is your role in this conversation? For example: "You are a teacher, I am an X, you will need to challenge me on Y." Weirdly, it does pay to be nice: the answers can improve when you compliment it.

- Ask for self-correction: You can paste a response back in and ask the model to check it. Even the better models can still hallucinate. If in doubt, ask it for a chain of thought: "Let’s work this out in a step-by-step manner to ensure we have the right answer."
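For readers who like to keep their prompts in a reusable form, the four ingredients above can be sketched as a small Python template. This is only an illustration, not Dominik's method or any tool's official format: the names `PromptParts`, `build_prompt` and `SELF_CORRECTION` are our own, and the example school details are invented. The output is plain text to paste into whichever chat tool you use.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Hypothetical container for the four ingredients of a prompt."""
    persona: str               # who the AI is, and who you are
    context: str               # school, audience, goals, background
    style_and_format: str      # how you want the answer to look
    question: str              # the actual request
    self_correct: bool = True  # whether to add a step-by-step check


# Wording taken from the self-correction tip in the list above.
SELF_CORRECTION = (
    "Let's work this out in a step-by-step manner "
    "to ensure we have the right answer."
)


def build_prompt(parts: PromptParts) -> str:
    """Join the ingredients into one block of text, separated by blank lines."""
    sections = [
        parts.persona,
        "Context: " + parts.context,
        "Style and format: " + parts.style_and_format,
        parts.question,
    ]
    if parts.self_correct:
        sections.append(SELF_CORRECTION)
    return "\n\n".join(section.strip() for section in sections)


# Invented example values, purely for illustration.
example = PromptParts(
    persona=(
        "You are a critical friend to a senior school leader. "
        "I am a vice principal, and you will need to challenge my assumptions."
    ),
    context=(
        "A city day school. The audience is the senior leadership team. "
        "The goal is to stress-test a plan for recruitment and retention of staff."
    ),
    style_and_format="Short notes under clear headings.",
    question="What are the three weakest points in a plan that relies on pay alone?",
)

print(build_prompt(example))
```

Keeping the pieces separate makes it easy to change one ingredient, such as the persona, while leaving the school context untouched.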

And remember, the first set of prompts is unlikely to be perfect. You can tweak it by adding further prompts to do with context or comparisons. You can ask it to relay the information a second time, but this time from a different perspective: perhaps a parent, a pupil, or a business-savvy governor.

In no way are we proposing AI as a replacement for meaningful (and enjoyable) discussion with colleagues or for the all-important critical friend visits where we gain constructive criticism and learn from each other. AI is the perfect extra tool in the belt for the time-stretched, who want to drop in and out of ideas or even rigorous debate, and benefit from an extra place to plan, sound out ideas, and think.
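If you find yourself asking for the same set of alternative viewpoints regularly, the follow-up prompts can be generated rather than retyped. The sketch below is a minimal illustration in Python; the function name `perspective_follow_ups` and the exact wording of the follow-up are our own assumptions, and you would still paste each line into the conversation yourself.

```python
def perspective_follow_ups(perspectives: list[str]) -> list[str]:
    """Return one follow-up prompt per perspective (hypothetical wording)."""
    return [
        "Please give me that information again, "
        f"this time from the perspective of {perspective}."
        for perspective in perspectives
    ]


# The three perspectives suggested in the paragraph above.
follow_ups = perspective_follow_ups(
    ["a parent", "a pupil", "a business-savvy governor"]
)

for prompt in follow_ups:
    print(prompt)
```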

Written by Graham Purves, Vice Principal & Head of Senior School at The Grammar School at Leeds, and Rebecca Snell, Educational Consultant & Research Officer in the Education Department at the University of Oxford